The past year has been a whirlwind of innovation in AI-generated music.

April saw the debut of the first viral AI hit, Ghostwriter’s “Heart on My Sleeve,” which showed that AI-created music could not only exist but also captivate listeners.

Several other milestones have fueled the rapid evolution of music creation tools. Google revealed MusicLM, a tool that generates songs from simple text prompts. Paul McCartney used AI to isolate John Lennon’s vocals from an old demo for a new Beatles track. Grimes offered to split streaming royalties on songs that use an AI clone of her voice. Most notably, Meta open-sourced MusicGen, a model that turns text into quality music samples, and that release has led to a burst of apps built on the model.

Generative music is poised to blur the boundaries between artist, listener, producer, and performer, much as instruments, recorded music, synthesizers, and samplers did before it. By shortening the path from concept to creation, AI can let a broader range of people make music while enhancing the creative potential of existing artists and producers.

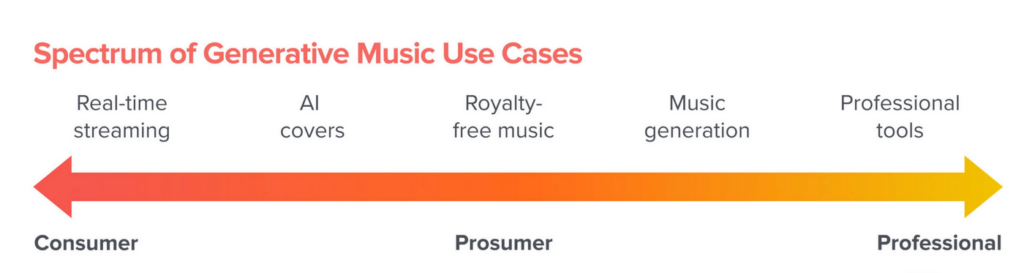

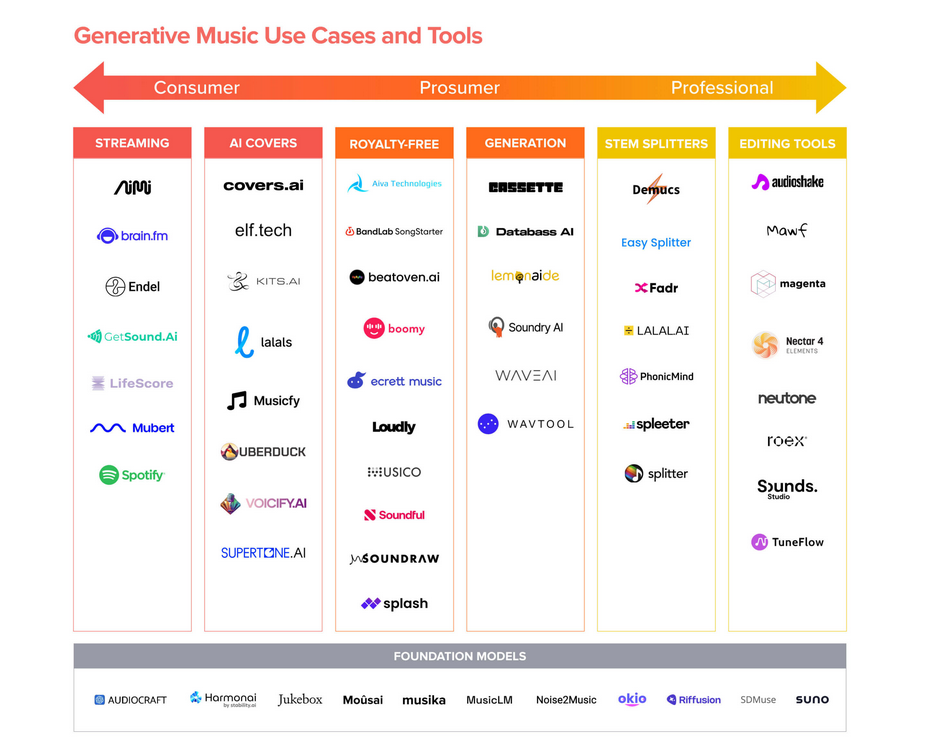

This piece looks at how AI is being applied to music today, where it may go next, and which companies and technologies are reshaping the industry. For clarity, we’ve organized the discussion around five primary use cases, though grouping these products by target audience (from everyday users to professional creators with commercial ambitions) provides additional insight.

Streaming Music Reimagined: The Advent of Generative AI Platforms

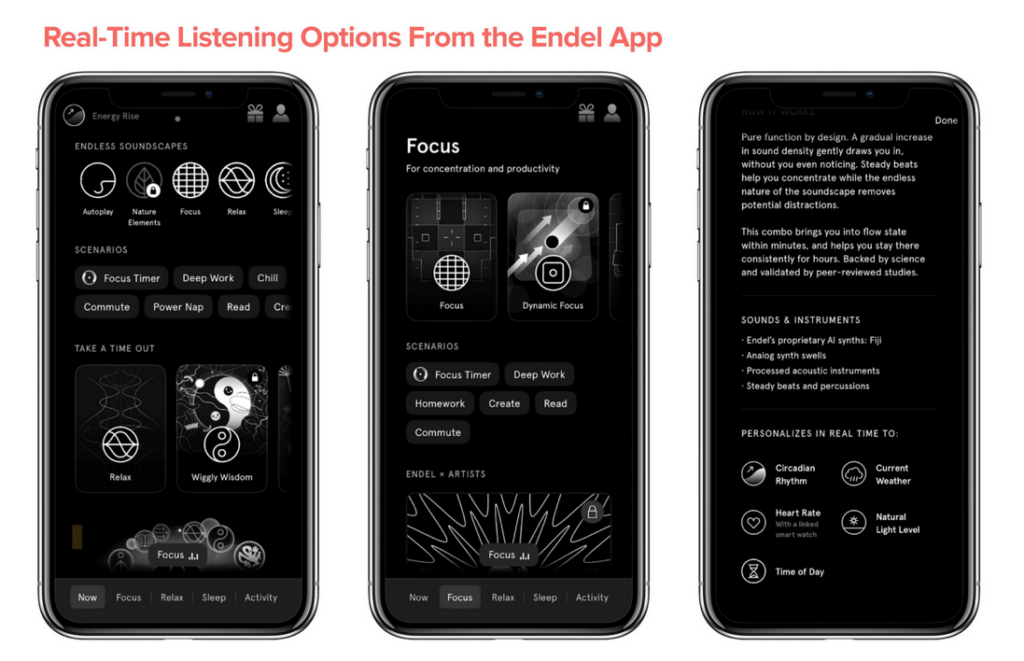

So far, generative streaming has taken hold mainly in functional music, through platforms like Endel, Brain.fm, and Aimi. These platforms generate endless playlists designed to put you in a specific mood or state of mind, changing dynamically with the time of day and your current activity. (The line between functional and mainstream music is starting to blur, though: major labels like UMG are teaming up with generative music companies like Endel to craft “functional” versions of hit tracks.)

Within the Endel app, the sound shifts noticeably between “deep work” and “relaxation” modes. Endel’s collaborations with artists to generate albums based on their artistic style further exemplify this trend.

To date, generative streaming has largely focused on ambient soundscapes and background music without vocals. Yet AI-driven streaming services that also produce conventional music, complete with AI-synthesized vocals, are not far-fetched. Just as recorded music popularized the long-play album, generative models could introduce “endless tracks” as a new music format.

It gets more interesting with interfaces that don’t rely solely on text prompts. Imagine a system that tailors music to your tastes based on genre or artist preferences, or even by analyzing your listening history without any explicit instructions. Or consider a platform that syncs with your calendar and offers the ideal “pump up” playlist ahead of significant events.

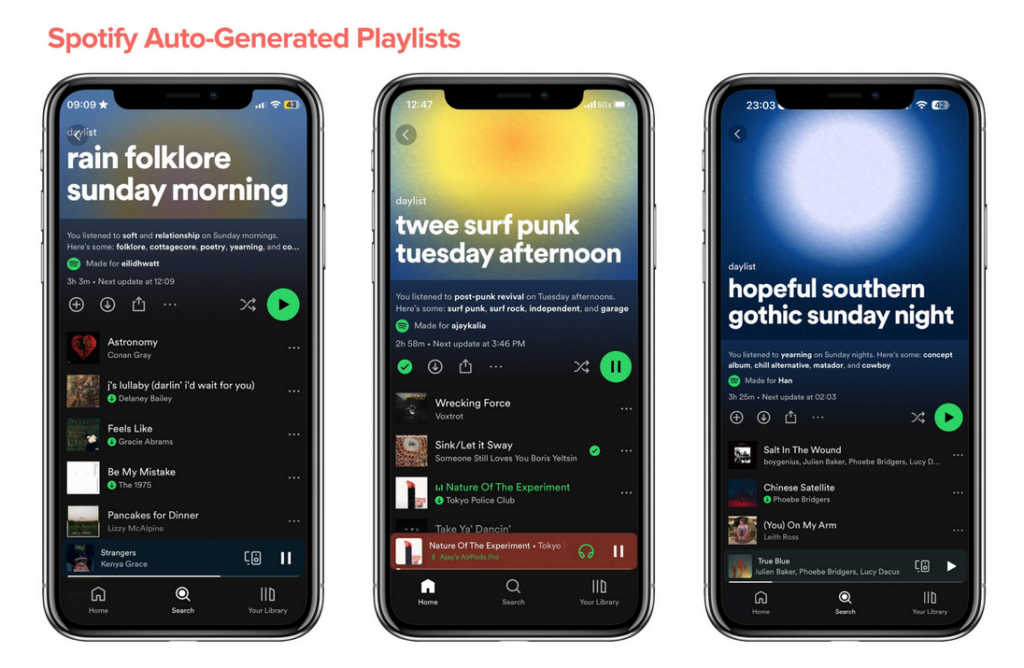

Spotify is already advancing towards personalized, automatically generated playlists. In February, they introduced an AI DJ feature, providing a tailored selection of music with commentary, based on your recent and all-time favorites and fine-tuning the selection according to your reactions. This month, they launched “Daylist,” an auto-updating playlist that adapts several times daily to match your habitual listening patterns at particular moments.

While Spotify’s current innovations stop short of generating new music, focusing instead on curating playlists from existing tracks, the ultimate incarnation of this technology would likely blend AI-created and human-produced music, soundscapes, instrumentals, and full songs.

The Revolution of AI-Produced Covers

The phenomenon of AI-generated covers has become the breakout success story in the realm of AI music. Since the release of “Heart on My Sleeve” in April, the AI cover scene has exploded, with the hashtag #aicover amassing over 10 billion views on TikTok alone.

This surge in popularity originated largely within the AI Hub Discord community, which had over 500,000 members before it was shut down in early October amid ongoing copyright infringement disputes. The legal gray areas remain, and the community has since fragmented into smaller, more secretive groups dedicated to training and sharing voice models for particular characters or artists. Many use retrieval-based voice conversion (RVC) to morph spoken or sung clips into another person’s voice, and some enthusiasts publish guides on model training and cover creation alongside links to their own trained models.

Utilizing these models at home calls for a degree of tech know-how, prompting the emergence of browser-based solutions like Musicfy, Voicify, Covers, and Kits that aim to simplify the process. These platforms generally require the upload of a vocal clip for transformation, though the advent of text-to-song capabilities is already in sight, with platforms such as Uberduck paving the way for rap and beyond.

Yet the biggest challenge for AI-generated covers remains the maze of legal rights, and anyone building in AI music has to navigate it.

The legal questions echo past technological shifts, notably the sampling controversies that marked the early days of hip hop. The legal battles of the 1990s eventually led to a broader recognition that negotiated economic agreements for sampling could benefit all parties involved, creatively and financially. This realization prompted record labels to establish specialized teams for sample clearance, and even inspired Biz Markie’s ironically titled album “All Samples Cleared!”

Amid the unease some labels and artists feel towards AI music, there lies a vein of opportunity — the prospect of earning passive income from creations utilizing their voice, without lifting a finger. A prime example is Grimes, who launched Elf.tech to allow the production of new tracks using her voice, committing to share royalties from any AI-generated song that turns a profit.

Doing this at scale will require supporting infrastructure. Artists need platforms where they can host their voice models, track the usage of their AI covers, and monitor the streams and revenue of their tracks. Artists and producers may also want to experiment with voice models to test lyrics, hear how a voice fits a song, or explore collaborations.

The Democratization of Music Creation with AI

For anyone creating content for YouTube, podcasts, or business video projects, sourcing royalty-free music is a common hurdle. Stock music libraries exist, but navigating them can be a hassle, and the best tracks are often overused. The result is a genre often joked about for being both ubiquitous and forgettable: the unremarkable “muzak” or “elevator music.”

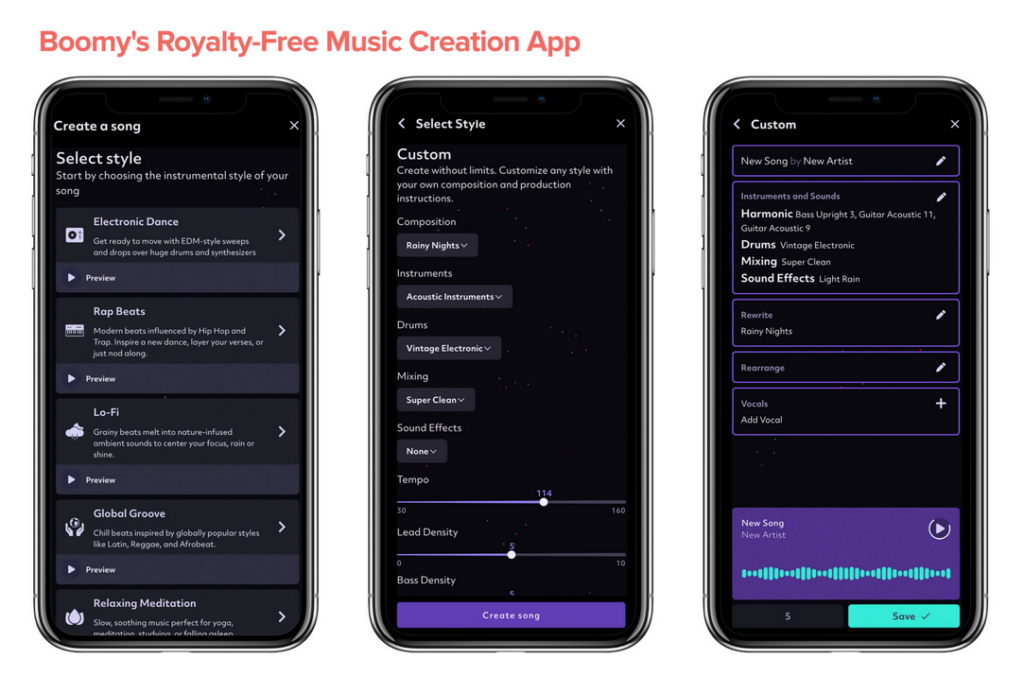

However, the landscape is shifting with the advent of AI-generated music. Tools such as Beatoven, Soundraw, and Boomy are revolutionizing how we generate unique, royalty-free music. These platforms offer the ability to select a genre, mood, and energy level, then craft a new track based on these preferences. They even offer customization options like tempo adjustment, instrument modification, and note rearrangement for fine-tuning.

Royalty-free music seems headed for a future dominated by AI creations. As the genre becomes more commoditized, it isn’t far-fetched to imagine AI generating all background music, which would eliminate the longstanding trade-off between cost and quality.

Initially embraced by individual creators and small to medium businesses, these AI music tools are poised to move upmarket. That shift includes both traditional enterprise sales to larger customers such as gaming studios and integration into content creation platforms through APIs.

Music Creation’s New Frontier

The intersection of large-scale models with music unlocks remarkable opportunities for bedroom producers and other prosumer segments, even those without formal music training, to produce music of a professional caliber. Innovations to watch include:

- Inpainting: Filling out a musical phrase from a few played notes.

- Outpainting: Predicting the continuation of a song section into the next bars, a feature MusicGen supports with its “continuation” setting.

- Audio to MIDI: Transforming audio into MIDI, capturing nuances like pitch bend and velocity, as seen in Spotify’s Basic Pitch offering.

- Stem separation: Breaking down a song into its components such as vocals, bassline, and drums through technologies like Demucs.
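
To make the audio-to-MIDI item a little more concrete, here is a minimal sketch of the last step in such a conversion: mapping an already-estimated frequency to a MIDI note number plus a pitch-bend value. This is not how Basic Pitch works internally (the hard part, estimating pitches from audio, is done by a model and is skipped here). The function names and the ±2 semitone bend range are assumptions made for illustration; only the equal-temperament formula (A4 = 440 Hz = MIDI note 69) is standard.

```python
import math
from typing import Tuple

A4_HZ = 440.0                # reference tuning: A4
A4_MIDI = 69                 # MIDI note number of A4
BEND_RANGE_SEMITONES = 2.0   # assumed bend range; real synths may be configured differently
BEND_CENTER = 8192           # 14-bit pitch-bend value meaning "no bend"


def hz_to_midi(freq_hz: float) -> float:
    """Return the fractional MIDI note number for a frequency in Hz."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    return A4_MIDI + 12.0 * math.log2(freq_hz / A4_HZ)


def hz_to_note_and_bend(freq_hz: float) -> Tuple[int, int]:
    """Split a frequency into the nearest MIDI note and a 14-bit pitch-bend value."""
    exact = hz_to_midi(freq_hz)
    note = int(round(exact))
    offset = exact - note  # distance from the nearest note, in semitones
    bend = BEND_CENTER + int(round(offset / BEND_RANGE_SEMITONES * 8192))
    bend = max(0, min(16383, bend))  # clamp to the valid 14-bit range
    return note, bend
```

For example, `hz_to_note_and_bend(440.0)` returns `(69, 8192)`: the note A4 with no bend. A slightly sharp or flat input keeps the same note number and moves the bend value above or below the center.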

Future music production could look something like this:

- Select a legally clearable song to sample.

- Separate its stems and convert a segment to MIDI.

- Input a few synthesizer notes, employing inpainting to complete the phrase.

- Use outpainting for additional phrase extensions.

- Use generative tools to compose the rest of the track, imitate or extend the work of studio musicians, and finish the track in a desired style.
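
The inpainting and outpainting steps in the workflow above can be sketched as a data flow over MIDI note numbers. This is a toy illustration only: the two functions are hypothetical placeholders (linear interpolation and a transposed repeat) standing in for what would really be calls to a generative model, and stem separation and audio-to-MIDI conversion are assumed to have already happened.

```python
from typing import List, Optional


def inpaint(phrase: List[Optional[int]]) -> List[int]:
    """Fill gaps (None) by interpolating between the nearest played notes.

    Toy stand-in: a real system would ask a generative model for the gaps.
    """
    known = [i for i, n in enumerate(phrase) if n is not None]
    if not known:
        raise ValueError("need at least one played note")
    filled: List[int] = []
    for i, note in enumerate(phrase):
        if note is not None:
            filled.append(note)
            continue
        left = max((k for k in known if k < i), default=None)
        right = min((k for k in known if k > i), default=None)
        if left is None:
            filled.append(phrase[right])   # gap before the first played note
        elif right is None:
            filled.append(phrase[left])    # gap after the last played note
        else:
            frac = (i - left) / (right - left)
            filled.append(round(phrase[left] + frac * (phrase[right] - phrase[left])))
    return filled


def outpaint(phrase: List[int], extra_notes: int, step: int = 2) -> List[int]:
    """Extend a phrase by repeating it, shifted up `step` semitones per repeat.

    Toy stand-in for model-based continuation.
    """
    if not phrase:
        raise ValueError("phrase must not be empty")
    out = list(phrase)
    i = 0
    while len(out) < len(phrase) + extra_notes:
        shift = step * (i // len(phrase) + 1)
        out.append(phrase[i % len(phrase)] + shift)
        i += 1
    return out


played = [60, None, None, 66]                # a few synth notes with gaps
completed = inpaint(played)                  # fill in the phrase
extended = outpaint(completed, extra_notes=4)  # continue it for four more notes
```

The point of the sketch is the shape of the pipeline: each stage takes a partial musical idea and returns a fuller one, so a model-backed stage could be swapped in without changing the stages around it.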

Emerging software products are focusing on parts of the production stack, like generating samples (Soundry AI), melodies (MelodyStudio), MIDI files (Lemonaide, AudioCipher), or even mixing (RoEx).

Multimodal models that can interpret music and other audio inputs will be crucial here, since many people lack the vocabulary to describe the sound they are after. We also expect hardware and software to become more intertwined, including “generative instruments” such as DJ controllers and synthesizers that build these capabilities in directly.

Professional Tools Reimagined

We now turn our attention to a burgeoning category of AI music products designed for use by music producers, artists, and labels. While termed “professional,” these tools are also accessible to indie and amateur creators.

They range widely in complexity, use cases, and how they fit into traditional production workflows. We can categorize them into three main groups:

- Browser-based applications focusing on singular creation or editing tasks, usable outside conventional production software. Tools like Demucs, Lalal, AudioShake, and PhonicMind specialize in stem splitting.

- AI-enhanced Virtual Studio Technology (VST) plugins that integrate into digital audio workstations (DAWs) such as Ableton Live, Pro Tools, and Logic Pro. Plugins like Mawf, Neutone, and iZotope handle sound synthesis or processing within a producer’s existing setup.

- Innovations aiming to completely redefine the DAW with an AI-first methodology, making music production more approachable for newcomers and professionals. With many popular DAWs being decades old, ventures like TuneFlow and WavTool aspire to construct a new-generation DAW from scratch.

The Visual Art Analogy in Music

Platforms like Midjourney and Runway have democratized the creation of high-quality visual content, which traditionally demanded expertise in sophisticated, pricey tools. Just as graphic designers have started using these generative AI tools to work faster and iterate more quickly, the music industry is on the cusp of its own transformation, with AI-powered tools shrinking the gap between inspiration and execution toward zero.

This anticipated “Midjourney moment” for music, when producing a high-quality track becomes easy for the average consumer, will have profound effects on the whole music ecosystem, from professional artists to first-time consumer creators.

Our ultimate vision is a comprehensive tool that takes text, audio, images, or video as input to understand the desired vibe and themes of a track, then acts as an AI copilot throughout songwriting and production. We don’t foresee the top charts being dominated by fully AI-generated songs, because the human element and the artist connection in music are irreplaceable, but we do anticipate AI assistance vastly simplifying music creation for many. And that’s a tune we’re excited to hear play out!